We've had over a decade of software eating the world and embedding itself within all forms of our daily lives. AI is obviously going to drastically reduce the expertise currently required to build software. While we've seen tools like Webflow or Squarespace enable anyone to create websites, I'm thinking about a change that will affect all forms of software.

Context

Back in 2016 I noticed that every consumer app had to be built three times, across iOS, Android and Web, despite having the same features. That always seemed really stupid to me, and it was one reason I bet on React Native and Expo to fix this. I want to write my software once, and it should be smart enough to run anywhere.

I've been writing apps with these tools ever since, and now, in 2023, I think cross-platform tools have enough traction to have "won". So now it's time to think about what's next. What's the next tool that will dramatically speed up creating software?

I gave a conference talk at app.js on 12th May and finished with some ideas about how AI is going to change the way we build software.

This post is part 2 of that talk: it imagines a future where AI can write entire programs.

A redistribution of the responsibilities of the software engineer

Background

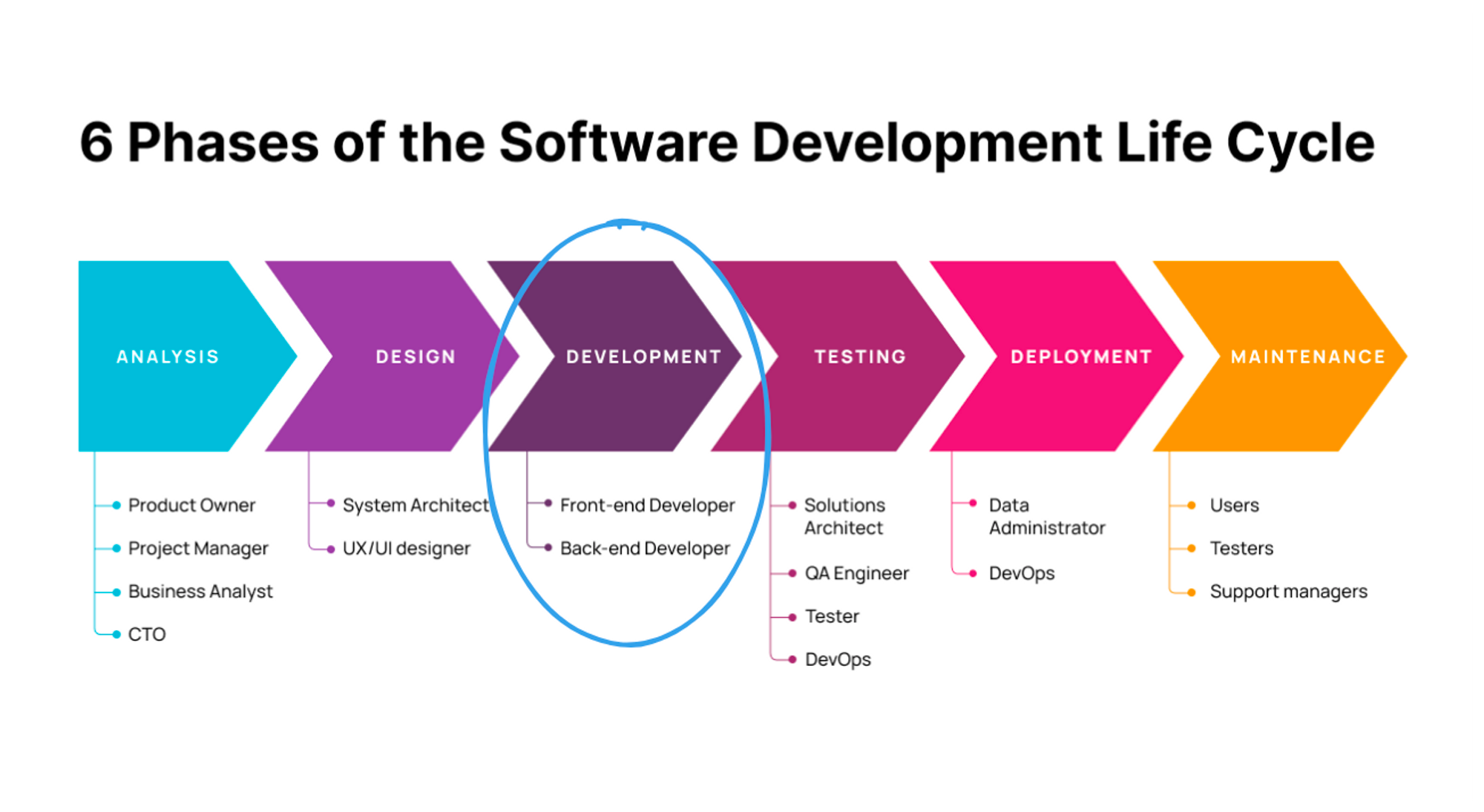

Software today is built with a long and expensive “development” phase: software engineers can spend months, or even years, figuring out what code needs to be written to meet a given design or product specification.

We’ve seen AI developer tools like GitHub Copilot be extremely successful, and I’ve seen software engineers from junior to senior level use ChatGPT for coding help. What comes next? The amount of my code that AI can write is growing exponentially.

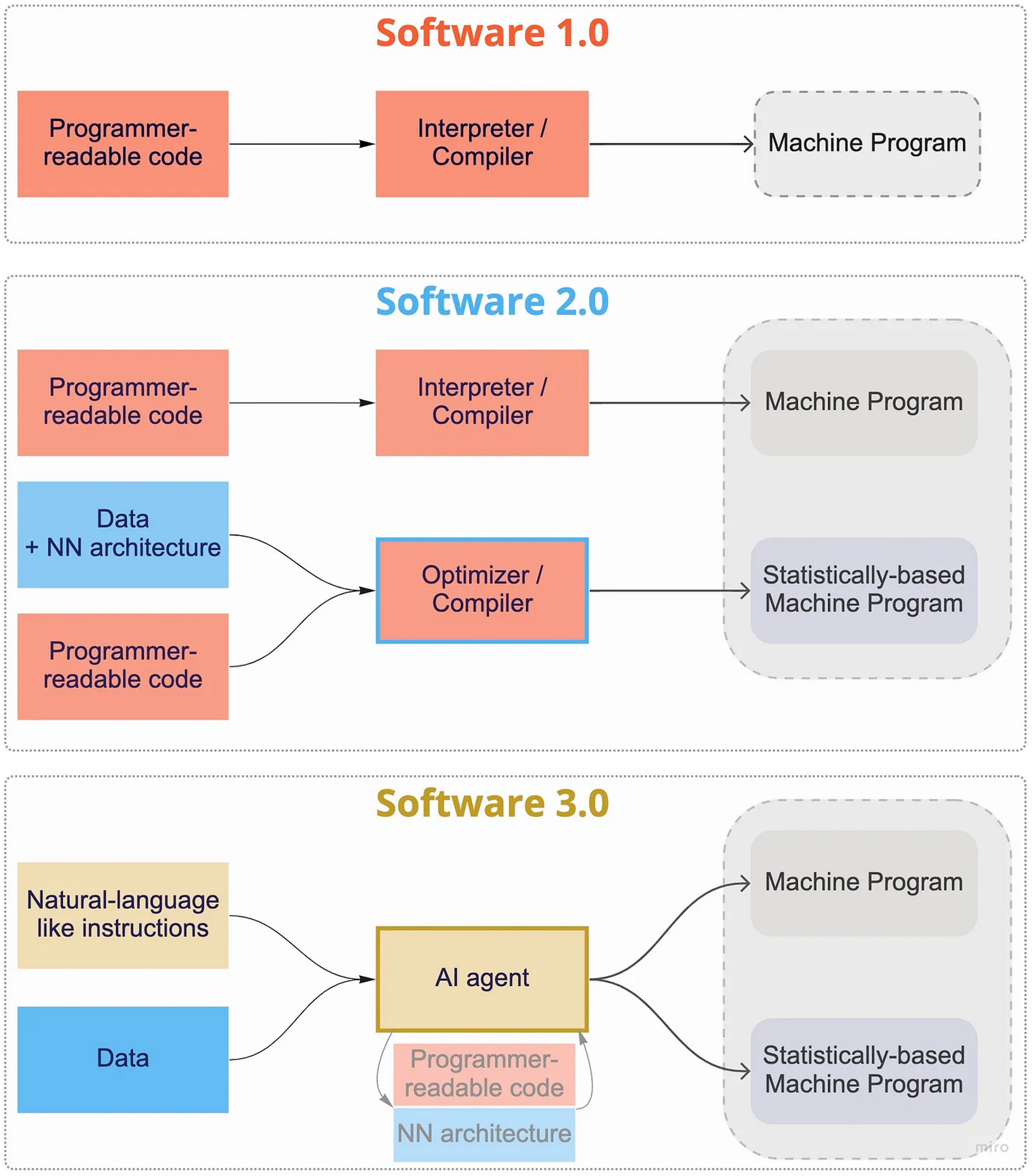

My "new bet" is that over the next decade natural language will supplant programming languages as the way software is written. With a rapid increase in the efficiency of software development, we’re going to see a redistribution of software engineers' responsibilities and a change in the way software is designed and tested.

Text to product (whole program synthesis)

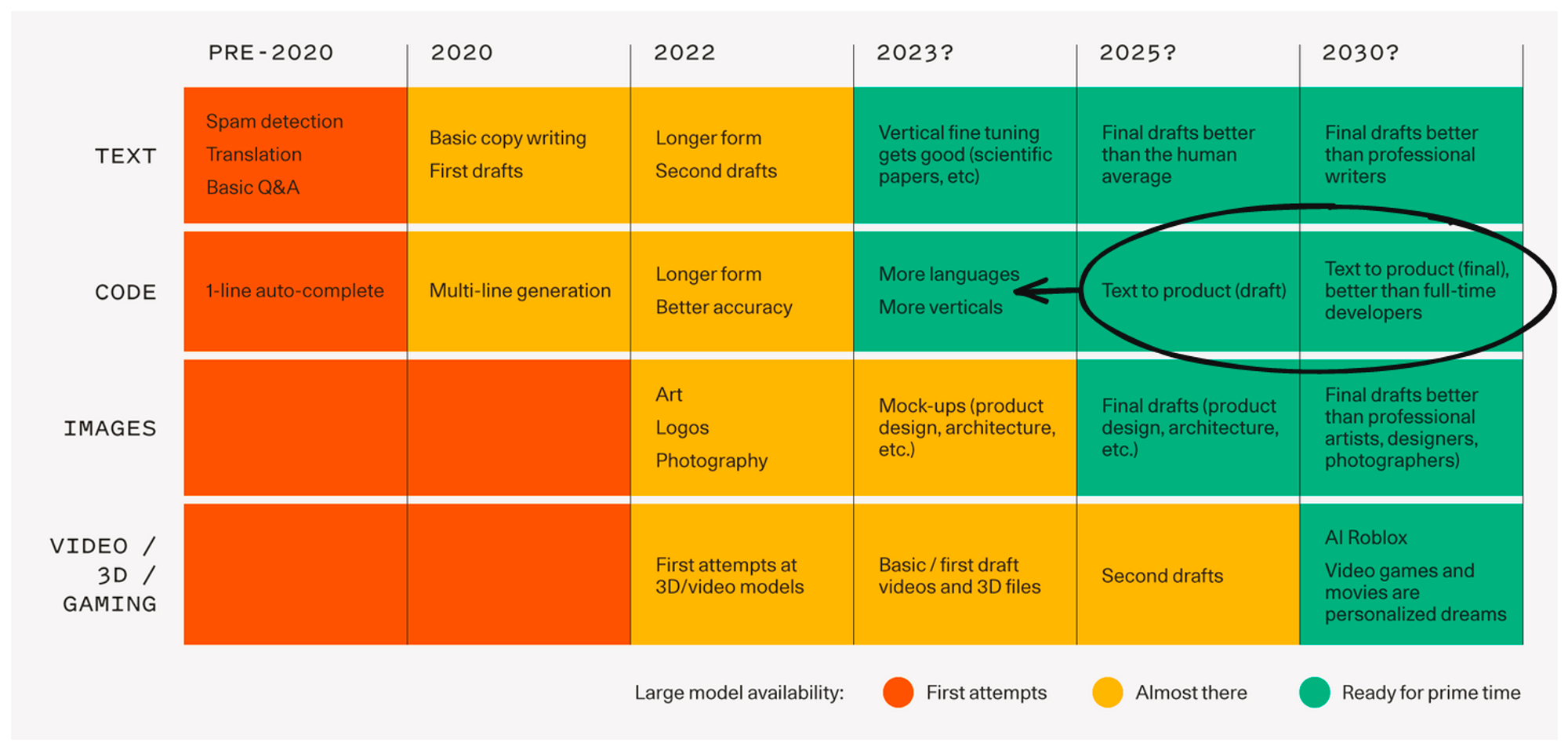

Sequoia’s generative AI market map from September 2022 predicted that text to product would arrive around 2025 to 2030, but it’s emerging today.

The limiting factor in generative AI right now is the "context window", i.e. the amount of input (such as existing code) a model can take in at once, which is what lets you give it context about the output you need. But larger context windows are coming. On April 19th, the research paper “Scaling Transformer to 1M tokens and beyond with RMT” suggested it could become possible to feed in detailed specifications and tests and generate the code for a program. On May 11th, Anthropic released 100K context windows: “With 100K context windows, you can: Rapidly prototype by dropping an entire codebase into the context and intelligently build on or modify it”.
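To make the context-window constraint concrete, here's a minimal Python sketch that estimates whether a codebase would fit in a 100K-token window. It assumes roughly four characters per token, which is only a rule of thumb (real tokenizers vary, especially on code), and the helper names and file suffixes are hypothetical.

```python
import math
from pathlib import Path

# 100K tokens, the window size Anthropic announced on May 11th.
CONTEXT_WINDOW_TOKENS = 100_000
# Assumption: ~4 characters per token is a rough heuristic, not a real tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate how many tokens a piece of text would use."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def codebase_tokens(root: str, suffixes: tuple = (".py", ".js", ".ts", ".tsx")) -> int:
    """Sum the estimated tokens of every matching source file under root (hypothetical helper)."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(token_count: int, window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """True if the estimated token count fits inside the context window."""
    return token_count <= window
```

Usage would look like `fits_in_context(codebase_tokens("./my-app"))`, where `./my-app` is a placeholder path. In practice you'd also need to leave room in the window for your instructions and the model's reply.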

Thinking ahead, if the context window problem is solved, and AI agents are able to continually suggest changes to the existing codebase, we're going to need new tools to make use of these technologies.

Early tools I've spotted in this space are LangChain, e2b, Second.dev, relevance.ai and yeager.ai. Important papers in this space are Generative Agents: Interactive Simulacra of Human Behavior and ReAct: Synergizing Reasoning and Acting in Language Models.

Why is this going to happen quicker than we might expect?

Coding is a perfect use case for generative AI because:

- It's well structured and follows a strict grammar, which means it's actually easier to generate than natural language, which has less structure and a lot more nuance.

- The growth of open source on platforms like GitHub means that there's an ever-growing, always-current training set that models can train on.

- It's also really easy to collect real-life human feedback: if the generated code isn't good, it might be invalid, or simply not used by the person who requested it. There's a lot of "signalling" feedback here that can be used to improve the quality of the generation.

We’re just doing what we’ve always done: making things easier

Software engineers have always abstracted away complexity and automated the things we simply didn’t like doing. Developers have moved from punch cards and Fortran to languages that feel more and more natural. The popularity of Python shows this: programmers prefer to write programs that are easy to read and close to natural language.

But you still need to be able to describe the requirements precisely, and you'll still need to test the result. That leads me to believe we're going to see a big shift in emphasis away from development and towards requirements and testing.
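One way to picture that shift: a human writes the requirement as test cases, and AI-generated code is accepted only if it passes them. Here's a minimal Python sketch of that acceptance check. `GENERATED_SOURCE` is a hand-written stand-in for model output, and `passes_spec` is a hypothetical helper, not part of any tool mentioned in this post.

```python
# The human-written requirement, expressed as test cases: (arguments, expected result).
SPEC = [((2, 3), 5), ((-1, 1), 0), ((0, 0), 0)]

# Hypothetical stand-in for code returned by an AI model.
GENERATED_SOURCE = """
def add(a, b):
    return a + b
"""


def passes_spec(source: str, func_name: str, spec: list) -> bool:
    """Load generated source and check the named function against every test case."""
    namespace: dict = {}
    try:
        # exec runs the code inside this process; a real system should sandbox it.
        exec(source, namespace)
        func = namespace.get(func_name)
        if not callable(func):
            return False
        return all(func(*args) == expected for args, expected in spec)
    except Exception:
        # Code that fails to load, or crashes on a test case, counts as a failure.
        return False


print(passes_spec(GENERATED_SOURCE, "add", SPEC))  # True
print(passes_spec("def add(a, b):\n    return a - b\n", "add", SPEC))  # False
```

The human effort here goes into `SPEC`, not into `add`: the tests are the precise description of the requirement, and any implementation that satisfies them is accepted.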

Software 3.0 and the importance of testing

Software 3.0 is quickly emerging as the model for how software will be built from this year onwards. Software engineering won’t go extinct, but demand for software engineers will quickly fall, and the skill of reviewing and testing will become vitally important.

From a blog post by the CEO of CodiumAI:

Testing tools — Will become ultra-smart, automated and informative. This will help to greatly remediate the way that manual testing is done today ($10B+ market?). This is more than “nice to have”, it will become a necessity to develop intelligent testing tools to cope with the sophistication that SW 3.0 will enable.

Software 3.0 — the era of intelligent software development

Early-stage tools are already being built here too. Guardrails AI describes itself as “a Python package that lets a user add structure, type and quality guarantees to the outputs of large language models (LLMs)”.

So while a developer might use something like LangChain to build an AI agent that writes software, they could use Guardrails to ensure that, for a given input, the AI's output has the expected structure and quality.
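The idea of checking that a model's output has an expected structure can be sketched in plain Python. This is not Guardrails' API; it's a minimal hand-rolled check, assuming the model has been asked to reply with a JSON object, and `EXPECTED_SCHEMA` and `validation_errors` are hypothetical names.

```python
import json

# Hypothetical schema for a model asked to return a generated file as JSON:
# field name -> required Python type.
EXPECTED_SCHEMA = {"filename": str, "code": str, "tests_passed": bool}


def validation_errors(raw: str, schema: dict) -> list:
    """Return a list of problems with the model's raw output (empty if it is valid)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["output must be a JSON object"]
    errors = []
    for field, expected_type in schema.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], expected_type):
            errors.append(f"field {field!r} should be of type {expected_type.__name__}")
    return errors


good = '{"filename": "app.py", "code": "print(1)", "tests_passed": true}'
bad = '{"filename": "app.py", "tests_passed": "yes"}'
print(validation_errors(good, EXPECTED_SCHEMA))  # []
print(validation_errors(bad, EXPECTED_SCHEMA))
```

Returning a list of errors rather than raising makes it easy to take a natural next step: send the errors back to the model and ask it to try again.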

Regulation

Software 3.0 will also have a regulatory component. Today, testing is mandatory for safety-critical software such as the software in airplanes, and I imagine we will see something similar for AI models. The EU is already drafting regulation on AI; it’s now just a matter of “when, not if”.

From Sam Altman’s testimony to the US Senate on what regulation might look like:

For example, the US government might consider a combination of licensing and testing requirements for development and release of AI models above a threshold of capabilities.

However, regulation will have problems.

How do we define a “threshold of capabilities” when the goalposts for those capabilities are moving so quickly? AI models come in all shapes and sizes: today I can run RedPajama (whose creators just raised a seed round) on my laptop, and it's open source. Are we heading towards a future where all software has a regulatory component?

This passage from the famous leaked Google memo “We Have No Moat, And Neither Does OpenAI” is relevant:

Indeed, in terms of engineer-hours, the pace of improvement from these models vastly outstrips what we can do with our largest variants, and the best are already largely indistinguishable from ChatGPT. Focusing on maintaining some of the largest models on the planet actually puts us at a disadvantage.

Since most of the text on the Internet is openly accessible, anyone can scrape a training set, and open-first organisations can emerge to provide models and alignment. How do you regulate something that is extremely powerful yet so accessible?

Banning open-source models would leave the most powerful AI models in the hands of the largest organisations, and that could be restrictive for individuals looking for AI that runs on-device and respects their privacy.

Conclusion

- Software is now writing software, and this will lead to an extremely quick redistribution of skills across software engineering.

- Software engineering by humans will mainly be about reviewing and testing the output of AI.

- I expect new startups to emerge, building developer tools that provide a great experience for writing Software 3.0.